The Optional Driver

The curb is where a city rehearses its character. A bus leans into the lane with a practiced entitlement. A delivery van claims the hydrant because the schedule insists. A cyclist skims past a door that might open. Above it all floats the clean, bright promise of the ride-hailing app: press, wait, go.

For a decade, that promise has depended on a person. Not the abstract “driver” of corporate presentations, but a human being with a stubborn mental map: which side street is blocked by construction, which left turn is technically illegal, which crosswalk belongs to pedestrians only in theory. Uber turned that person into an on-demand utility. The phone became a dispatch radio for the twenty-first century.

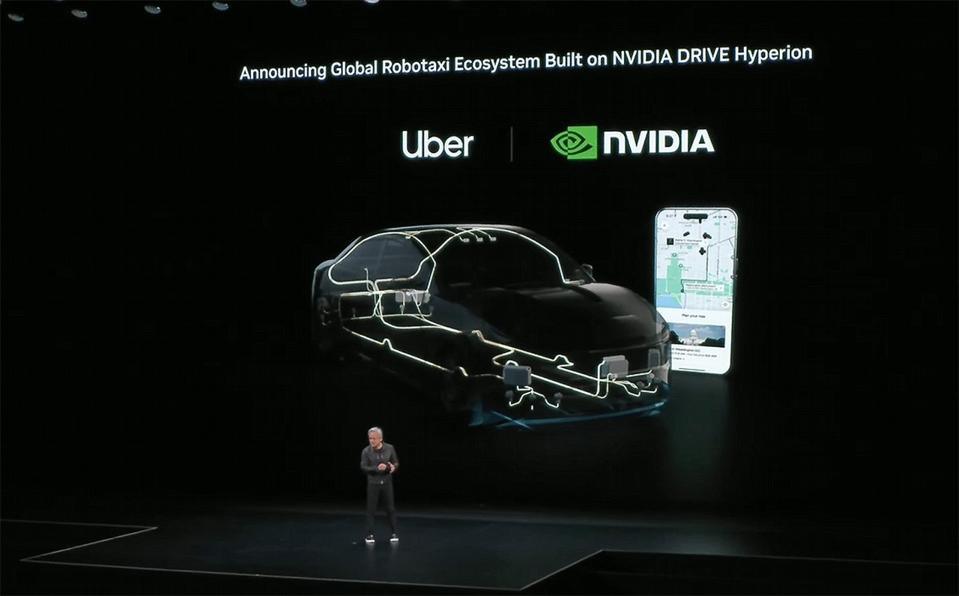

This fall, in Washington, D.C., Uber and NVIDIA offered a different picture. They announced a partnership meant to make what NVIDIA called a “robotaxi-ready” world, scaling a ride-hailing network that includes Level 4 autonomous vehicles built on NVIDIA’s DRIVE AV software and its DRIVE AGX Hyperion 10 platform. Uber said it will begin scaling an autonomous fleet in 2027 and is targeting 100,000 vehicles over time.

The future arrives with a bureaucratic label. “Level 4” comes from the Society of Automotive Engineers taxonomy, which divides driving automation into six levels, numbered 0 through 5. Level 4, “High Driving Automation,” means the automated system can handle the driving task within defined conditions and does not rely on a human to serve as a fallback. The definition contains its own caution: it is powerful inside its operating domain, and honest about where that domain ends.

NVIDIA’s move is to treat that power as something that can be packaged. Not as a single car, and not as a proprietary trick, but as a reference architecture. Hyperion 10 is NVIDIA’s attempt at a standard kit for autonomy: compute, sensors, board design, and operating system presented as a foundation that automakers and autonomy developers can build on. NVIDIA describes it as a production platform that can make vehicles “Level 4-ready.”

The platform is, in part, a list. Fourteen high-definition cameras. Nine radars. One lidar. Twelve ultrasonic sensors. Each item is a hedge against the world’s refusal to cooperate. Rain ruins a camera. Glare flattens contrast. A truck blocks a line of sight. Sensors overlap so the system can keep seeing when one sense is compromised, the way a cautious pedestrian listens when they cannot look.

Then comes the compute, the part that turns perception into action. NVIDIA says Hyperion 10 uses two DRIVE AGX Thor in-vehicle platforms based on its Blackwell architecture, with each delivering more than 2,000 FP4 teraflops and 1,000 TOPS of INT8 compute, designed for transformer workloads and “vision-language-action” models. The pitch is that the car will not merely detect an object but interpret a scene. It will recognize the difference between a stroller and a shopping cart, between a pedestrian waiting and a pedestrian stepping.

Uber’s contribution is not a sensor suite. It is the messy, expensive reality of demand. People want rides at 6:15 on a wet morning. They cancel. They change destinations. They want to be picked up on the wrong side of the street. Uber has spent years building a machine for matching those desires to supply, and the company now wants autonomous vehicles to become another kind of supply within the same marketplace. In the announcement, Uber described a unified network that includes human drivers and robot drivers, presented to riders as one service.

It is a careful framing, because robotaxis do not arrive everywhere. They arrive in pieces. They start in bounded zones where mapping is feasible and regulations can be negotiated, where the weather and the road geometry do not constantly invent new surprises. The image is futuristic, but the rollout is municipal.

NVIDIA and Uber described the scaling problem in the language of industry. They said they will build a joint “AI data factory” on NVIDIA Cosmos, a platform NVIDIA presents as a foundation model system for physical AI, curating and processing the data needed for autonomous vehicle development. The phrase is blunt, almost honest. Autonomy, in its current phase, is manufacturing. Raw experience goes in. Trained behavior comes out. Miles become product.

That manufacturing requires partners who build actual vehicles and actual driving stacks. NVIDIA’s announcement reads like a seating chart for an industry that has spent years reorganizing itself. Stellantis, Lucid, and Mercedes-Benz are named as collaborators for Level 4-ready vehicles compatible with Hyperion 10. Aurora, Volvo Autonomous Solutions, and Waabi are positioned on the freight side. The broader ecosystem list includes Avride, May Mobility, Momenta, Nuro, Pony.ai, Wayve, and WeRide.

Uber, for its part, has been collecting robotaxi relationships the way a port collects shipping lines. In May, Uber and Momenta said they would bring Momenta-powered autonomous vehicles onto the Uber platform in markets outside the U.S. and China, starting in Europe in 2026 with onboard safety operators. In July, Lucid described a plan with Uber and Nuro to deploy more than 20,000 Level 4 autonomous vehicles over several years, again accessed through Uber. The NVIDIA partnership extends the idea upward, away from one autonomy stack and toward a common substrate.

Safety sits over everything like a law of physics. NVIDIA introduced a Halos Certified Program, along with an inspection lab focused on AI safety and cybersecurity, meant to evaluate and certify systems for autonomous vehicles and robotics. In the autonomy world, reassurance has to be engineered, documented, audited, and, eventually, believed.

Belief is not purely technical. It is also competitive. Waymo, Alphabet’s autonomous driving unit, has been reported to be in discussions to raise substantial funding at a valuation above $100 billion while expanding its driverless ride-hailing operations to more markets, with reports describing large weekly ride volumes across several U.S. cities. That is what success looks like now: not a polished demo, but an operating service that endures boredom, traffic, and the casual cruelty of rush hour.

NVIDIA’s bet is that the winning system will resemble an ecosystem, with a standard platform underneath it. If Hyperion becomes the default skeleton for Level 4 vehicles, then the argument about autonomy shifts. It becomes less about whether the technology is possible and more about who controls the interfaces, the certification pathways, the update mechanisms, the economics of sensors, the pipelines that turn street life into training data.

A rider will not think in those terms. A rider will think in minutes. They will watch the little car icon approach. They will step toward the curb when it arrives. If the front seat is empty, they may hesitate, then climb in anyway, because the city has trained them to accept new arrangements so long as they are punctual.

The curb will keep staging its small dramas. The cyclist will still skim past. The delivery van will still block the view. And somewhere inside the vehicle, the moment will be rendered into signals and probabilities, the street translated into a kind of machine legibility, as if the city itself were being taught, one pickup at a time, how to drive.

Disclaimer:

All views expressed are my own and are provided solely for informational and educational purposes. This is not investment, legal, tax, or accounting advice, nor a recommendation to buy or sell any security. While I aim for accuracy, I cannot guarantee completeness or timeliness of information. The strategies and securities discussed may not suit every investor; past performance does not predict future results, and all investments carry risk, including loss of principal.

I may hold, or have held, positions in any mentioned securities. Opinions herein are subject to change without notice. This material reflects my personal views and does not represent those of any employer or affiliated organization. Please conduct your own research and consult a licensed professional before making any investment decisions.