The Semiconductor Divergence: ASE Technology’s FOPLP Revolution vs. Cerebras’ Monolithic Wafer-Scale Engine

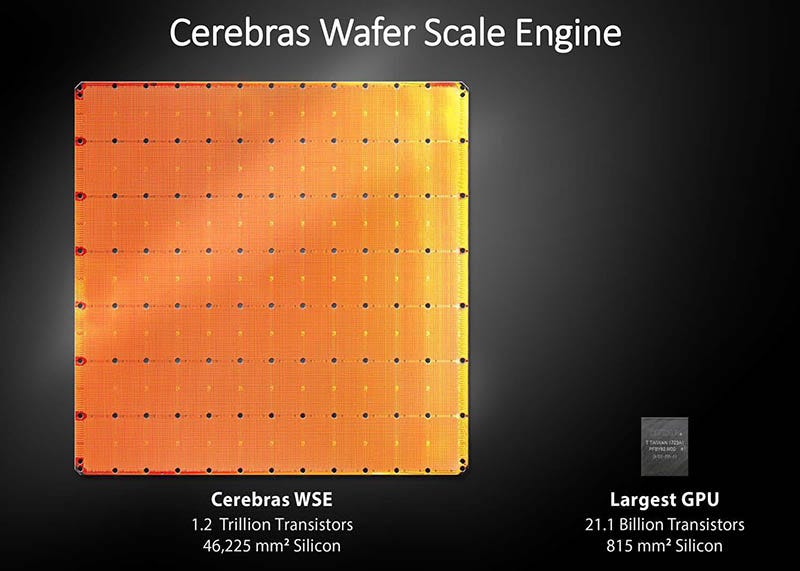

The semiconductor industry is approaching the physical boundaries of Moore’s Law, and this has created a fundamental split in how companies build the computational engines needed for the Artificial Intelligence era. The central constraint is the “reticle limit”: the maximum area a lithography tool can print in a single exposure, approximately 858 mm². That ceiling on the size of a single die has forced two very different strategies.

On one side stands ASE Technology Holding, the world’s largest OSAT (Outsourced Semiconductor Assembly and Test) provider. ASE champions a modular, industrialized approach using Fan-Out Panel-Level Packaging (FOPLP). On the other side is Cerebras Systems, which sidesteps the reticle limit entirely to produce the Wafer-Scale Engine (WSE), a monolithic processor the size of a dinner plate. This article analyzes ASE’s growth, contrasts the trade-offs of FOPLP against Wafer-Scale Integration, and looks at the plans of industry giants AMD and NVIDIA.

The ASE Growth Story: The “Kingmaker” of the AI Economy

ASE Technology Holding has evolved from a backend service provider into a strategic partner essential to the AI supply chain. Chipmakers are transitioning from monolithic System-on-Chips to heterogeneous “chiplets.” This shift makes advanced packaging the primary driver of system-level performance.

ASE’s dominance shows in its market position. The company commands an estimated 35 to 40% share of the global assembly and test market. The explosion in AI demand pushed ASE’s capacity utilization to nearly 90% in 2025. This gave the company significant pricing power. Reports indicate that ASE is preparing to raise backend packaging prices by 5 to 20% in early 2026. This move will offset rising costs and capitalize on the scarcity of advanced packaging capacity.

The Technological Pivot: FOPLP

ASE’s growth comes from its transition from wafer-level processing to panel-level processing. Traditional wafer-level packaging uses circular 300mm silicon wafers. Because rectangular packages cannot tile a circle cleanly, space is wasted at the edges, keeping area utilization below 60%. ASE’s FOPLP instead uses large rectangular panels, such as 600mm by 600mm. This geometry increases area utilization to 95% and allows significantly more chips to be processed simultaneously. The strategy reduces unit packaging costs by 20 to 30% compared to wafer-level alternatives.
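The geometric argument can be checked with a rough sketch. The code below counts how many square packages fit on a round wafer versus a square panel using a simple centered grid. The 50mm package size, the grid placement, and the function names are illustrative assumptions, not ASE's actual process parameters, and the sketch ignores edge exclusion zones and spacing between packages.

```python
import math

def dies_on_wafer(diameter_mm: float, die_w: float, die_h: float) -> int:
    """Count grid cells of size die_w x die_h that lie fully inside a circle.

    Uses a grid centered on the wafer; this is one simple placement,
    not an optimized one.
    """
    r = diameter_mm / 2
    cols = int(diameter_mm // die_w)
    rows = int(diameter_mm // die_h)
    count = 0
    for i in range(cols):
        for j in range(rows):
            x0 = -cols * die_w / 2 + i * die_w
            y0 = -rows * die_h / 2 + j * die_h
            # The corner farthest from the wafer center decides whether the cell fits.
            fx = max(abs(x0), abs(x0 + die_w))
            fy = max(abs(y0), abs(y0 + die_h))
            if fx * fx + fy * fy <= r * r:
                count += 1
    return count

def dies_on_panel(panel_w: float, panel_h: float, die_w: float, die_h: float) -> int:
    """Count grid cells that fit on a rectangular panel (no edge exclusion)."""
    return int(panel_w // die_w) * int(panel_h // die_h)

PKG_MM = 50.0  # hypothetical large AI package, 50 mm x 50 mm

wafer_count = dies_on_wafer(300.0, PKG_MM, PKG_MM)
panel_count = dies_on_panel(600.0, 600.0, PKG_MM, PKG_MM)

wafer_util = wafer_count * PKG_MM**2 / (math.pi * 150.0**2)
panel_util = panel_count * PKG_MM**2 / (600.0 * 600.0)

print(f"wafer: {wafer_count} packages, {wafer_util:.0%} of area used")
print(f"panel: {panel_count} packages, {panel_util:.0%} of area used")
```

Under these assumptions the wafer holds 16 packages (about 57% of its area) and the panel holds 144. The result is sensitive to package size: smaller packages tile a circle far more efficiently, so the wafer's disadvantage is largest for the big packages typical of AI accelerators. Real production lines also lose some panel area to edge clearance, which this idealized count leaves out.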

Central to this is the FOCoS-Bridge technology. By embedding small silicon bridges within the fan-out structure, ASE lets multiple ASICs and High-Bandwidth Memory (HBM) stacks communicate over short, dense die-to-die links. The result is an interconnect density rivaling monolithic silicon at a fraction of the cost.

Cerebras Systems: The Monolithic Counter-Argument

While ASE breaks chips apart to reassemble them, Cerebras Systems asks why we should cut the wafer at all. The Cerebras WSE-3 is a single die, roughly 215mm on a side, taken from a 300mm silicon wafer and kept intact as one functional unit instead of being diced into individual chips. This delivers 46,225 mm² of continuous silicon.
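To put the 46,225 mm² figure in context, the short calculation below compares it with the 858 mm² reticle limit and with the area of a full 300mm wafer. It uses only numbers quoted in this article plus the geometry of a circle; the variable names are illustrative.

```python
import math

RETICLE_LIMIT_MM2 = 858.0    # single-exposure limit quoted in this article
WSE3_AREA_MM2 = 46_225.0     # WSE-3 silicon area quoted in this article
WAFER_DIAMETER_MM = 300.0

# Area of the full circular wafer.
wafer_area = math.pi * (WAFER_DIAMETER_MM / 2) ** 2

# Side length if the WSE-3 area is treated as a square.
wse_side = math.sqrt(WSE3_AREA_MM2)

# How many reticle-limited dies would cover the same area.
reticle_equiv = WSE3_AREA_MM2 / RETICLE_LIMIT_MM2

# Share of the round wafer that ends up in the WSE-3.
wafer_fraction = WSE3_AREA_MM2 / wafer_area

print(f"equivalent square side: {wse_side:.0f} mm")
print(f"reticle-limit equivalents: {reticle_equiv:.1f}")
print(f"share of full wafer area: {wafer_fraction:.0%}")
```

The arithmetic gives a square about 215mm on a side, equal to roughly 54 maximum-size conventional dies, and about 65% of the full wafer disc. The remaining third is the curved edge material that a square die cannot use.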

The WSE-3 contains 4 trillion transistors and 900,000 AI-optimized cores. Its primary advantage is the elimination of the “memory wall.” Cerebras places 44GB of SRAM directly on the wafer next to the compute cores. This allows the WSE-3 to achieve 21 PB/s of memory bandwidth, roughly 7,000 times the memory bandwidth of an NVIDIA H100 GPU. Because data stays on the chip, the design drastically reduces the latency and power consumption associated with moving data between separate GPUs in a cluster.
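The bandwidth comparison is simple division, and it is worth seeing which H100 figure it implies. The sketch below takes the 21 PB/s and 44GB figures from this article. The 3.35 TB/s value for the H100 is an assumption drawn from NVIDIA's published specification for the SXM variant and does not appear in the article.

```python
WSE3_BANDWIDTH_BPS = 21e15     # 21 PB/s, from this article
WSE3_SRAM_BYTES = 44e9         # 44 GB on-wafer SRAM, from this article
H100_BANDWIDTH_BPS = 3.35e12   # assumption: H100 SXM HBM3 bandwidth, 3.35 TB/s

# Ratio using the assumed H100 SXM figure.
ratio = WSE3_BANDWIDTH_BPS / H100_BANDWIDTH_BPS

# H100 bandwidth that the "7,000 times" claim would imply.
implied_h100_bps = WSE3_BANDWIDTH_BPS / 7000

# Time to read the entire on-wafer SRAM once at full bandwidth.
sweep_seconds = WSE3_SRAM_BYTES / WSE3_BANDWIDTH_BPS

print(f"ratio vs. assumed H100: {ratio:,.0f}x")
print(f"H100 bandwidth implied by 7,000x: {implied_h100_bps / 1e12:.1f} TB/s")
print(f"time to sweep all SRAM: {sweep_seconds * 1e6:.1f} microseconds")
```

With the assumed 3.35 TB/s, the ratio comes out near 6,300 rather than 7,000. The 7,000 figure corresponds to an H100 bandwidth of about 3 TB/s, so the headline multiple depends on which GPU variant and rounding are used. Either way, the gap is more than three orders of magnitude.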

Historically, wafer-scale integration was considered impractical because a single defect could ruin the whole wafer. Cerebras addressed this by designing in fine-grained redundancy. The chip uses uniform, tiny cores of 0.05 mm², so any one defect disables only a minuscule fraction of the silicon. If a core is defective, the hardware routes around it. This allows Cerebras to achieve 93% silicon utilization, essentially “healing” the wafer during manufacturing.
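A simple yield model shows why small cores matter. The sketch below uses the textbook Poisson yield formula, Y = exp(-A × D0), where A is the area and D0 the defect density. The defect density of 0.1 defects per cm² is a hypothetical value chosen for illustration; it is not a published figure for any foundry process, and this is not a description of Cerebras' actual yield methodology.

```python
import math

def poisson_yield(area_mm2: float, d0_per_cm2: float) -> float:
    """Probability that a region of the given area contains zero defects."""
    area_cm2 = area_mm2 / 100.0
    return math.exp(-area_cm2 * d0_per_cm2)

D0 = 0.1               # hypothetical defect density, defects per cm^2
RETICLE_MM2 = 858.0    # reticle-limit die, from this article
WSE_MM2 = 46_225.0     # WSE-3 area, from this article
CORE_MM2 = 0.05        # WSE core size, from this article
CORE_COUNT = 900_000   # WSE-3 core count, from this article

# Chance that a die has no defects at all (no redundancy).
y_reticle = poisson_yield(RETICLE_MM2, D0)
y_wafer = poisson_yield(WSE_MM2, D0)

# With tiny cores, a defect costs one core instead of the whole die.
y_core = poisson_yield(CORE_MM2, D0)
expected_bad_cores = CORE_COUNT * (1.0 - y_core)

print(f"defect-free reticle-size die: {y_reticle:.1%}")
print(f"defect-free wafer-scale die:  {y_wafer:.1e}")
print(f"expected defective cores:     {expected_bad_cores:.0f} of {CORE_COUNT:,}")
```

Under these assumptions a reticle-size die is defect-free about 42% of the time, while a wafer-scale die with no redundancy would essentially never be. Splitting the same silicon into tiny cores changes the question: only a few dozen cores out of 900,000 are expected to be bad, a loss that spare cores and rerouting can absorb.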

Comparative Analysis: Modular vs. Monolithic

The industry choice between ASE’s FOPLP and Cerebras’ WSE comes down to a “trinity” of needs: Cost, Latency, and Volume.

ASE’s philosophy is democratization. They focus on low cost and high volume. Their modular approach is the “workhorse.” It allows manufacturers to mix best-of-breed components, such as a 3nm GPU core with older, cheaper I/O dies. These packages fit into standard server racks. The trade-off is that despite silicon bridges, moving data between chiplets still incurs a latency penalty compared to monolithic silicon.

Cerebras focuses on performance, aiming for maximum speed and low latency. The WSE is the “Formula 1” car. Its on-wafer fabric offers unmatched connectivity with 214 Pb/s (petabits per second) of bandwidth. For workloads requiring massive parameter counts and real-time inference, it is unbeatable. However, it is capital intensive. It requires specialized power delivery of up to 25kW per system and liquid cooling, which makes it difficult to integrate into standard data centers.

The Adoption Verdict: Will AMD and NVIDIA Follow?

The industry giants are voting with their R&D budgets. The results heavily favor the ASE strategy of modularity, though with some nuances.

AMD is currently the most aggressive adopter of FOPLP. They face supply bottlenecks with TSMC’s CoWoS (Chip-on-Wafer-on-Substrate). Consequently, AMD has partnered with ASE and other OSATs to integrate FOPLP into its Instinct MI400 and MI500 series GPUs. AMD is building the “Helios” rack-scale platform using FOPLP to connect thousands of accelerators. By securing panel-level capacity, AMD aims to fulfill massive orders. This includes their partnership to deploy 6 gigawatts of GPUs for OpenAI. AMD will not adopt the Cerebras wafer strategy because their entire CDNA architecture relies on the flexibility of chiplets.

NVIDIA currently dominates using TSMC’s CoWoS wafer-based packaging. However, NVIDIA has booked nearly 60% of TSMC’s CoWoS capacity, creating a bottleneck for itself and others. NVIDIA is transitioning to its “Rubin” platform (R100) in 2026. While the company currently relies on CoWoS, it is actively exploring panel-level packaging, which TSMC reportedly calls CoPoS (Chip-on-Panel-on-Substrate). NVIDIA is also looking at glass substrates for deployment around 2027 to 2028. NVIDIA is unlikely to adopt the Cerebras strategy. Its business model depends on selling discrete GPUs that fit into a vast ecosystem of servers from Dell, HPE, and Supermicro. A wafer-scale engine would disrupt this supply chain and its proprietary NVLink/NVSwitch rack architecture.

Conclusion: A Bifurcated Future

The growth story of ASE Technology is one of industrialization. By successfully scaling FOPLP, ASE provides the “railroad tracks” for the AI economy. They enable AMD, NVIDIA, and Broadcom to bypass the reticle limit economically. The FOPLP market is projected to grow significantly as it becomes the standard for high-volume AI accelerators and consumer electronics.

Cerebras has carved out a defensible niche for the absolute highest tier of supercomputing. For “sovereign AI” clouds like G42, and for massive model training where time-to-solution is the only metric that matters, the WSE offers performance that modular chiplets cannot physically match.

Ultimately, the industry will not choose one over the other. It will use both for different ends. ASE will manufacture the millions of chips that run the world’s applications. Meanwhile, Cerebras will likely power the few dozen supercomputers that train the frontier models of tomorrow.

Disclaimer:

All views expressed are my own and are provided solely for informational and educational purposes. This is not investment, legal, tax, or accounting advice, nor a recommendation to buy or sell any security. While I aim for accuracy, I cannot guarantee completeness or timeliness of information. The strategies and securities discussed may not suit every investor; past performance does not predict future results, and all investments carry risk, including loss of principal.

I may hold, or have held, positions in any mentioned securities. Opinions herein are subject to change without notice. This material reflects my personal views and does not represent those of any employer or affiliated organization. Please conduct your own research and consult a licensed professional before making any investment decisions.